Creativity is one of the most prized skills of the 21st century, yet there is no scientific consensus on how it should be measured

Despite the broad consensus on the importance of creative thinking skills, they are still hard to find on the job market. The results of the Future of Jobs Survey 2018 (1) by the World Economic Forum suggest that while creativity is already in demand, the need will only grow in the near future. The survey ranked creativity, originality and initiative fifth among the skills in demand, and predicts that they will rank third by 2022. In contrast to this demand, creativity is one of the hardest skills to identify in job candidates. According to PwC’s 20th CEO Survey global talent study (2) from 2017, creativity is one of today’s ‘Skill Battlegrounds’: a very important skill that is difficult to find.

The PwC study showed that creativity and innovation are very important skills that are difficult to find

Source: PwC’s 20th CEO survey, January 2017 (1,379 CEOs)

Given the demand, we should focus on fostering and enhancing these skills. For that to happen it is necessary to have a tool to assess creativity. However, given the complex nature of the concept, and despite the tremendous effort scientists have put into research, there is still no established method for creativity assessment. This proves to be a bottleneck in the process of fostering creativity.

Based on the literature, assessment in the form of a battery of tests could be a viable solution.

“We argue that variety in DT [divergent thinking] assessment approaches should be used to reveal further benchmark findings, which are very useful when it comes to comparisons of competitive theories or the examination of new research topics related to DT.” (3)

“In order to effectively measure the target constructs, creativity research should ultimately seek to utilize a battery of tasks in order to capture shared variance. Intelligence researchers have benefited enormously from this approach, which has allowed them to reliably predict elusive constructs with great success.” (4)

“This implies that, when possible, it is best to use various DT indices. That conclusion fits well with theories of DT, which predict multiple rather than one single indicator.” (5)

Based on the suggested solution of using a battery of tests, and the fact that most existing studies testing divergent thinking have few participants, we are creating an online platform for studying creativity that is open for anyone to use. We expect participation numbers similar to those of our Skill Lab: Science Detective game, which has reached more than 20,000 players. This is significantly more than in the divergent thinking studies found in an initial ad hoc review of the existing literature, which had around 220 participants on average.

CREA is a combination of innovative games and validated tests. It is a suite of three open-ended games intended to measure creativity (novelty and value), Convergent Thinking (CT) and Divergent Thinking (DT) using rater-based and empirical methods, including algorithmic assessment. The suite also includes a battery of widely accepted DT and CT ability tests as well as variations of psychometric ability tests for general cognitive ability, including an abstract reasoning test.

1: Can a portfolio-based approach (battery of games/tests) reveal deeper insights into the constructs underlying creativity?

2: To what extent can scalable, digital measures of creativity supplement or replace traditional expert-assessed tests?

3: To what extent are aspects of creativity, such as divergent and convergent thinking, domain general or contextual?

4: To what extent does task-based creativity assessment reflect real-world applications of creativity?

5: What can we learn about the relationship between creativity and general cognitive abilities?

We, the people at ScienceAtHome and Center for Hybrid Intelligence, place great emphasis on doing research in a way that is not daunting to the population. We work to translate complex problems into games that the public can engage with, and thus contribute to solving problems in various fields of science without having a PhD degree, and while having fun.

Game-based assessment tools are gaining more and more attention in the field of psychology. They are fun and show validity similar to that of psychometric tests. By using games, some effects (such as test anxiety or the researcher effect) can be removed from the equation, giving us cleaner data on the phenomenon being tested. Games also allow us to make observations that are closer to real-world phenomena, and they are motivating and engaging. In addition, many people play digital games as part of their daily lives, so we can gather assessment information without interrupting their daily activities (6).

The three mini games we use in the CREA suite are: crea.tiles, crea.shapes, and crea.blender.

crea.tiles consists of 10 tiles that the player can move around, one at a time. A tile must be placed in an empty spot that shares an edge with another tile. Only tiles at the edge of the shape can be moved, and only if moving them does not leave any other tile ‘by itself’, without an adjacent tile. The tiles therefore always form one continuous shape.

crea.tiles. ‘O’ tiles can move to any ‘Z’ location. ‘X’ tiles cannot move.
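The movement rule can be illustrated with a short sketch. It assumes tiles sit on a square grid and that "adjacent" means sharing an edge; the function names (`is_connected`, `movable_tiles`, `legal_destinations`) are hypothetical and are not taken from the actual crea.tiles implementation.

```python
from collections import deque

Cell = tuple[int, int]
NEIGHBOURS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # edge adjacency only (assumption)


def is_connected(tiles: set[Cell]) -> bool:
    """Return True if all tiles form one edge-connected shape."""
    if not tiles:
        return True
    start = next(iter(tiles))
    seen = {start}
    queue = deque([start])
    while queue:
        x, y = queue.popleft()
        for dx, dy in NEIGHBOURS:
            neighbour = (x + dx, y + dy)
            if neighbour in tiles and neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return len(seen) == len(tiles)


def movable_tiles(tiles: set[Cell]) -> set[Cell]:
    """Tiles whose removal leaves the remaining tiles in one piece."""
    return {tile for tile in tiles if is_connected(tiles - {tile})}


def legal_destinations(tiles: set[Cell], tile: Cell) -> set[Cell]:
    """Empty cells that share an edge with one of the remaining tiles."""
    rest = tiles - {tile}
    if not is_connected(rest):
        return set()  # moving this tile would split the shape
    candidates = {(x + dx, y + dy) for x, y in rest for dx, dy in NEIGHBOURS}
    return candidates - rest - {tile}  # drop occupied cells and the tile's own spot


row = {(0, 0), (1, 0), (2, 0), (3, 0)}  # a straight line of four tiles
print(movable_tiles(row))               # only the two end tiles can move
print(len(legal_destinations(row, (0, 0))))
```

Checking connectivity after removing the tile, and then only offering cells next to the remaining tiles, guarantees that every legal move keeps the figure in one piece.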

Players can interact with the game in several modes. In the respective modes, they have to create as many digits, dogs or creatures as they can, and they are also asked to label their creations. In the “Challenge” mode, the players get a Target figure. They see four figures and their objective is to work out which figure is closest in appearance to the Target figure. Once they make their choice, they try to re-create the Target figure, starting from their chosen figure. In the “Open play” mode, the players can play around as they wish and save the figures they find interesting. In the end, they can select their best figures, which they submit to the voting pool. The voting pool consists of figures that players deemed their most interesting creations. At the end of the Open play, all players can see other players’ creations and vote on which ones they find most interesting.

crea.tiles was created based on the Creative Foraging game (7, 8, 9). That game was designed to study creative exploration processes.

In crea.shapes, players are presented with two overlapping rectangles. They may resize and move both rectangles however they like. Where the two rectangles overlap, a darker shape appears. This shape is the focal shape. The players may take a snapshot of any given shape which they have created. The objective is to create as many different shapes as they can.

crea.shapes. Players create new shapes where the two rectangles overlap.
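As a rough illustration of how the focal shape could be computed, the sketch below assumes both rectangles are axis-aligned and described by a top-left corner plus width and height. The `Rect` class and `overlap` function are hypothetical names; the actual game may represent its rectangles differently.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rect:
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float


def overlap(a: Rect, b: Rect) -> Optional[Rect]:
    """Return the overlapping region of two axis-aligned rectangles, or None."""
    left = max(a.x, b.x)
    top = max(a.y, b.y)
    right = min(a.x + a.width, b.x + b.width)
    bottom = min(a.y + a.height, b.y + b.height)
    if right <= left or bottom <= top:
        return None  # the rectangles do not overlap (touching edges do not count)
    return Rect(left, top, right - left, bottom - top)


focal = overlap(Rect(0, 0, 4, 3), Rect(2, 1, 4, 4))
print(focal)
```

A snapshot could then be stored as the `Rect` returned here, which makes it easy to compare shapes by size and proportions.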

crea.shapes has been created based on the Open Creativity game, which was designed to study how creative outcomes can ensue over time (10).

crea.blender combines strengths of humans and algorithms to create unique images. In the game, players can play around with blending multiple images together in order to get new, interesting compositions. How “much” each image should contribute to the resulting picture is up to the player: each image’s weight can be adjusted using a slider underneath.
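The text does not specify how the slider weights are combined. One simple possibility, shown here purely as an assumption, is a normalized weighted average of one numeric vector per image (for example a latent vector in a generative model). The `blend` function is a hypothetical name and not part of crea.blender or Artbreeder.

```python
def blend(vectors: list[list[float]], weights: list[float]) -> list[float]:
    """Weighted average of equally long vectors, with weights normalized to sum to 1."""
    if not vectors or len(vectors) != len(weights):
        raise ValueError("need one weight per vector")
    dim = len(vectors[0])
    if any(len(v) != dim for v in vectors):
        raise ValueError("all vectors must have the same length")
    total = sum(weights)
    if total <= 0:
        raise ValueError("at least one slider must be above zero")
    return [
        sum(w * v[i] for v, w in zip(vectors, weights)) / total
        for i in range(dim)
    ]


# Two toy "images" with two features each; the second slider is set three times higher.
print(blend([[0.0, 2.0], [4.0, 6.0]], [1, 3]))  # [3.0, 5.0]
```

Normalizing by the sum of the weights means only the relative slider positions matter, so doubling every slider leaves the result unchanged.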

There are three modes in the game and the player has different objectives in each. In the “Creatures” mode, the task is to create as many animal-like figures as possible. In the “Challenge” mode, the player gets a Target image. They see three sets of images and their objective is to work out which set was used to create the Target image. Once they make their choice, they try to re-create the Target image with their chosen set. In the “Open play” mode, the player can play around as they wish and save the images they find most interesting. In the end, they can select three of their best pictures and submit them to the voting pool. The voting pool consists of images that players deemed their most interesting creations. At the end of the Open play, all players can see other players’ creations and vote on which ones they find most interesting.

Creature mode

Challenge mode – Selecting set

Challenge mode – Recreating target image

crea.blender is based on the project Artbreeder (11), which aims to be a new type of creative tool that empowers users’ creativity by making it easier to collaborate and explore. The main technology behind both crea.blender and Artbreeder is Generative Adversarial Networks (GANs).

Other variants of Artbreeder have been discussed here (12).

Try out Artbreeder here.

Computational creativity: A use case of hybrid intelligence

crea.blender is part of the field called Interactive Evolutionary Computation (IEC): artificial evolution guided by human direction. IEC is a collaborative process between humans and algorithms. Collaborative Interactive Evolution, the involvement of many users in the IEC process, drives crea.blender’s system.

Algorithms are becoming increasingly prevalent in our day-to-day lives, and there are many fields, such as Human Computer Interaction (HCI), Human Computation and Hybrid Intelligence, that explore the relationships between humans and algorithms.

CREA is a project of the Center for Hybrid Intelligence which focuses on using open crowd science to map the boundary between human and artificial intelligence.

Recently we developed crea.visions, which enables the public to create powerful, thought-provoking visions of utopias and dystopias. We worked on this in collaboration with the United Nations’ AI for Good in order to raise awareness and increase participation in working towards reaching the Sustainable Development Goals (SDGs).

Our project leader, Janet Rafner, was invited to AI for Good’s podcast to talk about crea.visions – the showcase of human intelligence combined with artificial intelligence to enable Artistic Intelligence.

Find out more about the project by listening to the podcast here, or watch the talk in the video.

Janet Rafner on the AI for Good podcast

Our work-in-progress paper on crea.blender was published in the CHI Play Conference 2020 series, titled crea.blender: A Neural Network-Based Image Generation Game to Assess Creativity. We write about our first pilot study which was set to investigate the user experience and behavior when interacting with crea.blender.

You can find a short, 2-minute presentation of it below.

We also have a publication on the motivation behind crea.visions titled The power of pictures: using ML assisted image generation to engage the crowd in complex socioscientific problems.

In September 2021, our literature review on current creativity assessment methods, titled Digital Games for Creativity Assessment: Strengths, Weaknesses and Opportunities, was published in the Creativity Research Journal. In it we describe the current state of creativity assessment and introduce the concept of stealth assessment as a potential solution.

We recently published another paper about crea.blender in the ICCC journal. This work, titled CREA.blender: a GAN based casual creator for creativity assessment, discusses in more detail how GANs can be used to measure convergent and divergent thinking, and why crea.blender is a good candidate as a creativity assessment measure.

Our project leader, Janet Rafner, wrote a graduate symposium paper titled Creativity assessment games and crowdsourcing, submitted to Creativity & Cognition 2021. Here she describes CREA, which is part of her PhD work, and the promising opportunity that games provide for creativity assessment.

Divergent and convergent thinking

Researchers mostly agree on two things: that a creative product is a mixture of something being new and being useful at the same time, and that creativity involves two distinct cognitive processes: being able to come up with many diverse ideas (divergent thinking – DT), and being able to select and pursue an idea that is good and useful (convergent thinking – CT).

Currently, creativity is assessed mainly through divergent thinking test batteries such as the Torrance Test of Creative Thinking (13, 14) and Guilford’s Structure of Intellect DT tests (15). The Remote Association Test is the only widely used test for convergent thinking. Our CREA suite includes the following three tests on divergent and convergent thinking.

Measuring divergent thinking

Fluency is operationalized by the number of responses to a given stimulus.

Originality is operationalized as the uniqueness of a response to a stimulus.

Flexibility is operationalized as the uniqueness of categories of response to a stimulus.

Elaboration is operationalized through the level of detail and development of the idea.
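The first three measures lend themselves to a simple computational sketch. The version below makes several assumptions that are not part of any published scoring manual: fluency counts distinct responses, originality counts responses that no other participant in the sample gave, and flexibility counts distinct categories using a hand-made category lookup. Only participant p1's responses come from the tire example on this page; the other participants and the categories are invented for illustration. Elaboration is left out because it usually requires human rating.

```python
from collections import Counter


def normalise(responses: list[str]) -> list[str]:
    """Lower-case and strip responses, dropping blanks and duplicates in order."""
    cleaned = (r.strip().lower() for r in responses)
    return list(dict.fromkeys(r for r in cleaned if r))


def score_sample(sample: dict[str, list[str]], category_of: dict[str, str]) -> dict:
    """Score every participant on fluency, originality and flexibility."""
    cleaned = {pid: normalise(rs) for pid, rs in sample.items()}
    # How many participants gave each response.
    givers = Counter(r for rs in cleaned.values() for r in rs)
    scores = {}
    for pid, rs in cleaned.items():
        scores[pid] = {
            "fluency": len(rs),
            "originality": sum(1 for r in rs if givers[r] == 1),
            # Unknown responses all fall into one "uncategorised" bucket.
            "flexibility": len({category_of.get(r, "uncategorised") for r in rs}),
        }
    return scores


# Hypothetical data for the prompt "unusual uses for a tire".
sample = {
    "p1": ["Hula hoop", "chair", "table", "flower pot"],
    "p2": ["swing", "chair"],
    "p3": ["flower pot", "swing"],
    "p4": ["chair"],
}
categories = {
    "hula hoop": "toy", "swing": "toy",
    "chair": "furniture", "table": "furniture",
    "flower pot": "garden",
}
scores = score_sample(sample, categories)
print(scores["p1"])  # {'fluency': 4, 'originality': 2, 'flexibility': 3}
```

Because originality here depends on what the rest of the sample answered, the same response can score differently in different samples, which is one reason large participant pools are useful.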

Alternative Uses Test

Testing Divergent Thinking

Participants are asked to list as many unusual/alternative uses for different objects as they can.

Example

Prompt: List as many unusual/alternative uses for a tire as you can.

Sample responses: Hula hoop, chair, table, flower pot

DT skills are assessed through measuring flexibility, fluency, originality and elaboration.

Figural test from Torrance Test of Creative Thinking

Testing Divergent Thinking

Participants are asked to make additions to the shapes to create something meaningful.

Example

Prompt: Draw something in each circle that incorporates the circle into the drawing as a whole

Sample response:

DT skills are assessed through measuring flexibility, fluency and originality.

Remote Association Test: linguistic and visual

Testing Convergent Thinking

Participants are asked to come up with the solution that connects all three elements they are given.

Example – Linguistic

Prompt: what word can form compound words with the three stimuli words?

Cottage/swiss/cake: _______________

Cream/skate/water: _______________

Sample responses: 1. cheese, 2. ice

Example – Visual

Prompt: what co-occurs with the three stimuli images?

Sample response: water

CT skills are measured based on the number of steps and time it takes to reach a given solution.

General cognitive ability is an umbrella term for distinct processes in the brain. Examples of cognitive abilities include processing speed, memory and recall, among the many mental processes needed to carry out any task at hand. Creativity and general cognitive abilities seem to rely on the same executive functions (updating, openness), though the nature of their relationship remains unclear (16). Our CREA suite includes the following three tests for assessing general cognitive abilities.

Abstract reasoning test

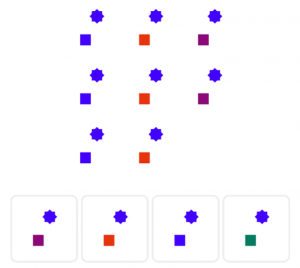

A participant is presented with a 3×3 matrix of geometric designs with one piece missing. The participant’s task is to work out the pattern in the matrix and identify, from a set of candidate pieces, the one that completes it. The questions and answers are all non-verbal. The test is designed to measure non-verbal, abstract reasoning (17).

Prompt: In this task, you will be shown a 3×3 grid of patterns. The last one, in the bottom right-hand corner, is missing. You need to select which of the four possible patterns along the bottom fits into the gap.

Navon

The basic idea of Navon’s study is that when objects are arranged in groups, there are global features and local features. For example, a group of trees has local features (the individual trees) and a global feature (the forest that the trees form together).

The basic finding of Navon’s work is that people are faster at identifying features at the global level than at the local level. This effect is also known as global precedence.

This task is designed to measure local and global processing speed.

Prompt: Decide if a letter is or contains the letter H or S.

The participant needs to press buttons corresponding to their choices.
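To make the stimulus concrete, here is a minimal sketch that builds a text version of a Navon figure: a large (global) letter drawn out of copies of a small (local) letter. The 5×5 glyphs and the function names are illustrative assumptions, not the stimuli used in CREA.

```python
# 5x5 outlines of the large letters; "X" marks where a small letter is drawn.
GLYPHS = {
    "H": ["X...X", "X...X", "XXXXX", "X...X", "X...X"],
    "S": ["XXXXX", "X....", "XXXXX", "....X", "XXXXX"],
    "T": ["XXXXX", "..X..", "..X..", "..X..", "..X.."],
}


def navon_stimulus(global_letter: str, local_letter: str) -> str:
    """Draw the global letter using copies of the local letter."""
    rows = GLYPHS[global_letter]
    return "\n".join(
        "".join(local_letter if cell == "X" else " " for cell in row)
        for row in rows
    )


def contains_target(global_letter: str, local_letter: str,
                    targets: tuple[str, ...] = ("H", "S")) -> bool:
    """Correct answer to the prompt: is a target present at either level?"""
    return global_letter in targets or local_letter in targets


print(navon_stimulus("H", "S"))   # a large H built from small S letters
print(contains_target("T", "S"))  # True: the target appears at the local level
print(contains_target("T", "T"))  # False: no H or S at either level
```

Comparing response times for trials where the target sits at the global level with trials where it sits at the local level gives the kind of contrast the task is designed to measure.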

Wisconsin Card Sorting

Participants must classify cards according to different criteria. Each card can be placed under one of four reference cards, and the only feedback is whether the classification is correct or not. Cards can be classified according to the color of their symbols, the shape of the symbols, or the number of symbols on each card. The classification rule changes every 10 cards. This implies that once the participant has worked out the rule, they will start making one or more mistakes when the rule changes. The task measures how well people adapt to the changing rules.

This is a test of cognitive reasoning.
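The rule-switching logic can be sketched as follows. The change every 10 cards comes from the description above; the order in which the rules cycle, and the simplification that each response is recorded as the dimension the participant sorted by, are assumptions made for illustration. An error is counted as perseverative when the participant keeps sorting by the rule that was active in the previous block of 10 cards.

```python
RULES = ("color", "shape", "number")  # assumed cycling order
CARDS_PER_RULE = 10                   # the rule changes every 10 cards


def active_rule(trial_index: int) -> str:
    """Rule in force on a given trial (trials are numbered from 0)."""
    return RULES[(trial_index // CARDS_PER_RULE) % len(RULES)]


def score(choices: list[str]) -> dict[str, int]:
    """Count all errors and errors that repeat the previous block's rule."""
    errors = 0
    perseverative = 0
    for i, choice in enumerate(choices):
        if choice == active_rule(i):
            continue
        errors += 1
        # Only possible after the first rule change.
        if i >= CARDS_PER_RULE and choice == active_rule(i - CARDS_PER_RULE):
            perseverative += 1
    return {"errors": errors, "perseverative_errors": perseverative}


# A hypothetical participant: two wrong guesses before finding "color",
# then three cards still sorted by "color" after the rule switches to "shape".
choices = ["shape", "number"] + ["color"] * 8 + ["color"] * 3 + ["shape"] * 7
print(score(choices))  # {'errors': 5, 'perseverative_errors': 3}
```

Separating perseverative errors from other errors is what lets the task speak to adaptation rather than to guessing alone.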

Creativity is one of the essential skills for the 21st century, and schools everywhere are attempting to assess and foster creativity in their students. For example in the US, for Pre-K (age 3-5) the Figural version of Torrance Test of Creative Thinking (TTCT), Thinking Creatively in Action and Movement, Unusual Box, Alternative Use Test, and the Test for Creative Thinking – Drawing Production are typically used. For K-12 the full TTCT and the Evaluation of Potential Creativity are also used.

We also believe that creativity is an important aspect of education, hence our motivation to contribute to and make an impact on research in the field. To illustrate the role of creativity in the big picture, we can use the Sustainable Development Goals framework adopted by the United Nations. The purpose of this framework is to deliver universal goals and targets in social, economic and environmental contexts across all countries, in order to tangibly improve the lives of citizens.

According to research, the lack of understanding of complex, contextual and nuanced skills like creativity, empathy, cooperation, and other skills, all commonly referred to as 21st century skills or soft skills, has been identified as a fundamental roadblock in reaching some of the Sustainable Development Goals like ‘Quality Education’ and ‘Decent Work and Economic Growth’ (18).

With CREA, we are moving toward creating a framework allowing a citizen-based assessment and measurement of creativity. This will involve creating a collaboration between members of the public and scientists, ultimately allowing the public to co-create the project with us. We have successfully utilized this methodology in our cognitive profiling game, Skill Lab: Science Detective. More than 20,000 people have participated since Skill Lab: Science Detective launched in collaboration with Danish Broadcasting (DR) in September 2018.

Professor MSO Jacob Sherson heads up the Center for Hybrid Intelligence at Aarhus University. He is an internationally known quantum physicist who has, amongst other achievements, set the world record for quantum teleportation. He founded ScienceAtHome to create an online platform that democratizes science by turning research problems into engaging games that both capture novel solution approaches and educate citizens and students on science concepts.

Janet Rafner is an incoming doctoral researcher in hybrid intelligence at the Center for Hybrid Intelligence at Aarhus University. She was formerly a U.S. Fulbright Fellow, with degrees in physics and studio art. Her current research includes theoretical and phenomenological turbulence, Human Computer Interfaces, Research through Design, and Research-Enabling Game-Based Education. Janet is also the international coordinator for the ScienceAtHome activities associated with the global educational initiative, Think Like a Scientist.

Andrea Carugati


Andrea Carugati is Professor of Information Systems and Associate Dean for Digitalization at Aarhus School of Business and Social Sciences at Aarhus University. Professor Carugati holds a MSc in Engineering from Chalmers University of Technology, and a PhD in Manufacturing Engineering from the Technical University of Denmark. As Associate Dean, Andrea focuses on the new competences needed by organizations moving into digitalization. Andrea’s research focuses on the strategic impact of technology, on IT driven organizational change, and on the deployment of information technology in organizations. Andrea Carugati has published, among others, in Management Information Systems Quarterly, the European Journal of Information Systems, Information Systems Journal, Database for Advances in Information Systems, European Management Review, and European Management Journal.

Lior Noy


Lior Noy is a Lecturer at the faculty of business administration at Ono Academic College (OAC). He teaches courses on creativity, innovation and AI. He holds a PhD in computer science from the Weizmann Institute of Science, with a background in cognitive psychology, computational neuroscience, and robotics, and studies creative interactions using computational tools. He is also a performer and teacher in Playback Theatre, an improvisation form based on real-life stories. Lior was the co-founder of the Theatre Lab at Weizmann Institute, a hub of research at the meeting point of physics, behavioral science and the performing arts. His current research interests include the development of computational paradigms for studying creative exploration; connecting these paradigms to different fields in cognitive sciences and creative human-agent interactions, with the long-term goal of developing a computational theory of creative collaboration.

Carsten Bergenholtz


Associate Professor Carsten Bergenholtz is a social scientist studying individual and collective problem-solving at the Department of Management at Aarhus University. Based on experiments where individuals are to solve artificial or real physics problems he tries to understand how varying personalities and cognitive abilities shape the problem-solving process. How are individuals that prioritize small changes different from individuals that go for more explorative attempts? Furthermore, what is the influence of being in a collective and seeing the behavior of others who are solving the same problem? Overall, the aim is to improve our understanding of how to organize problem-solving.

Michael Mose Biskjær


Michael Mose Biskjær is Assistant Professor of Digital Design at Aarhus University. By combining digital design, creativity, and innovation research, he adopts an interdisciplinary perspective to explore both theoretically and empirically the impact of digitalization on various creative practices. Among his main research interests are constraints, ideation, collaboration, and inspiration in, as well as new management methods for, creative design processes. In addition to developing courses in this field, he has recently been the driving force behind a creativity teaching package for high school and university students.

Kobi Gal


AI and Data Science Lab

Professor Kobi Gal is a faculty member of the Department of Software and Information Systems Engineering at the Ben-Gurion University of the Negev, and a Reader at the School of Informatics at the University of Edinburgh. His work investigates representations and algorithms for making decisions in heterogeneous groups comprising both people and computational agents. He has worked on combining artificial intelligence algorithms with educational technology towards supporting students in their learning and teachers to understand how students learn. He has published widely in highly refereed venues on topics ranging from artificial intelligence to the learning and cognitive sciences. Gal is the recipient of the Wolf foundation’s 2013 Krill prize for young Israeli scientists, a Marie Curie International fellowship, and a three-time recipient of Harvard University’s outstanding teacher award.

Rajiv Vaid Basaiawmoit


Dr. Rajiv Vaid Basaiawmoit, Ph.D., MBA, is the Head of Sci-Tech Innovation & Entrepreneurship at the Faculty of Science & Technology at Aarhus University. He works with student-led innovation and entrepreneurship, interdisciplinary collaboration and gamification. He combines a multi-disciplinary academic background (PhD in Biophysics & MBA in Sustainability) with a social impact focused mindset. Rajiv advises and mentors student-driven startups and teaches Entrepreneurship & Innovation to Master and PhD level students from diverse STEM disciplines. He has previously been a jury member of a national start-up program called LaunchPad Denmark. From an academic perspective, he conducts research on entrepreneurship for STEM disciplines and using gamification to make entrepreneurship a more engaging discipline.

Kristian Tylén


Kristian Tylén is an Associate Professor in Cognitive Science affiliated with the Center for Semiotics and The Interacting Minds Center at Aarhus University. His main research interests include language evolution, the psycholinguistics of dialogue, collective problem solving and creativity, cognition and material culture, which he approaches from a broad range of research methods including behavioural experimentation, fMRI brain imaging, physiological measurements, statistical modeling as well as conceptual/theoretical analysis.

Sebastian Risi


Associate Professor Sebastian Risi co-directs the Robotics, Evolution and Art Lab (REAL) at the IT University of Copenhagen. He is currently the principal investigator of a Sapere Aude: DFF Starting Grant (Innate: Adaptive Machines for Industrial Automation). He has won several international scientific awards, including multiple best paper awards, the Distinguished Young Investigator in Artificial Life 2018 award, a Google Faculty Research Award in 2019, and an Amazon Research Award in 2020. Recently he co-founded modl.ai, a company that develops AIs that can accelerate game development and enhance player engagement.

Lotte Philipsen


Associate Professor Lotte Philipsen holds a PhD in Art History and is a faculty member at the Department of Art History, Aesthetics and Culture, and Museology at Aarhus University. Her research is focused on contemporary art, aesthetic theory, and aesthetic practices related to new media and digital technology. Lotte takes particular interest in investigating how new technology is used in art in a narrow sense and in aesthetic practices in a broader sense, such as speculative design and activism. For instance, she is looking at the aesthetic potentials of computer technology, DNA-manipulation, artificial intelligence, robots, etc.

Joel Simon

Joel Simon is a multidisciplinary artist and toolmaker who studied computer science and art at Carnegie Mellon University before working on bioinformatics at Rockefeller University. He is currently pursuing Morphogen, a generative design company, and developing Artbreeder, a massively collaborative creative tool and network. His works sit somewhere in the region between art, design and research and are inspired by the systems of biology, computation and creativity.

Seyedahmad Rahimi


Seyedahmad Rahimi, Ph.D., is an assistant professor of Educational Technology in the School of Teaching and Learning at the University of Florida. Dr. Rahimi’s research focuses on assessing and fostering students’ 21st-century skills (focusing on creativity) and STEM-related knowledge acquisition (focusing on physics understanding). Toward that end, Dr. Rahimi designs, develops, and evaluates immersive learning environments (e.g., educational games) equipped with stealth assessment and educational data mining and learning analytics models.

Roger Beaty is an Assistant Professor of Psychology and Director of the Cognitive Neuroscience of Creativity Lab at Penn State University. His research examines the psychology and neuroscience of creative thinking, using computational, neuroimaging, and naturalistic methods. He received the Berlyne Award for early career contributions to creativity research from the American Psychological Association. He serves on the Executive Committee of the Society for the Neuroscience of Creativity and is an Associate Editor of the Creativity Research Journal. His research on creativity has received grant support from the US National Science Foundation and the John Templeton Foundation.